Home

Welcome to the home page. Below you will find a basic and simplified description of visual servoing. For those wanting more technical details, navigate to the “Visual Servoing Methods” page above.

What is visual servoing?

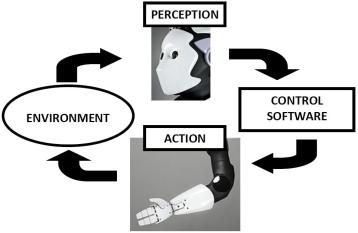

Visual servoing is a term used to describe a closed-loop control of a mechanism (often a robot) by using vision sensors (i.e. cameras). It gives a very accurate positioning result even with bad calibration of the system. It should not be confused with an open-loop control using vision. This difference is further explained in the next section. But now, to better explain the system, a simple diagram is shown below:

As the illustration above shows, the robot perceives the environment with its vision sensors (i.e. cameras in the “eyes”). Using this information, the control software produces a suitable action to carry out in the environment (e.g. moving the “hand” to a specific location). Here, “action” means the low-level control of the robot joints: visual servoing produces joint motions as its output, which in turn move the end effector (the hand). It should not be confused with “high-level” actions such as making a sandwich. However, such high-level actions are ultimately decomposed into low-level positioning tasks for each robot joint (the level of abstraction differs between robots, but somewhere inside, the actions translate into motor commands for the joints). In the illustration, visual servoing itself is represented by the control software.

As previously mentioned, another form of control (open-loop) is possible. How it differs from visual servoing is summarized in the table below:

| | Open-Loop Vision-Based Control | Visual Servoing (closed loop) |
| --- | --- | --- |
| Perception Output | Desired location | Desired location and current location |
| Motion Based on | Desired location from vision | Error perceived by vision |
| Type of Calibration Needed | Very accurate calibration of both camera and mechanical links, often a calibration designed to do both at the same time | Only a coarse calibration of the camera and mechanical links |

As shown above, there are 3 main differences between visual servoing and the open-loop control method. In the open-loop method, the vision system and the robotic arm are treated separately. The only link between the two is the information about where the robotic arm is supposed to move. The problem is that any calibration error (in the robot or in the vision system) will often prevent the robot from reaching the goal precisely. For example, the vision system may report the desired location with some error. Another example is the mechanical construction of the robot: each part has a mechanical tolerance, and the problem becomes worse as more mechanical links are involved. Several more sources of error are possible, some of which cannot be solved with calibration. A particular example is when one mechanical joint is loose (has a large tolerance).

In some cases, very precise camera calibration combined with very precise mechanical manufacturing can still produce a system with good accuracy even under open-loop control. Another method under recent development is a combined calibration procedure for both the robot’s mechanical links and the camera (this is used in the PR2, for example). This can give precision that is good enough, but some mechanical deficiencies still cannot be taken into account.
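To make the point about mechanical links concrete, here is a minimal sketch of how a small joint error accumulates in open-loop positioning. It is only an illustration: the planar arm, the 0.3 m link length, the 0.2 rad joint command, and the 1 degree offset per joint are all assumptions chosen for the example, not values from a real robot.

```python
import math

def forward_kinematics(joint_angles, link_length=0.3):
    """Return the (x, y) tip position of a planar serial arm (assumed model)."""
    x = y = 0.0
    heading = 0.0
    for angle in joint_angles:
        heading += angle  # each joint rotates everything after it
        x += link_length * math.cos(heading)
        y += link_length * math.sin(heading)
    return x, y

def open_loop_tip_error(num_links, joint_offset_rad=math.radians(1.0)):
    """Tip error when every joint is off by the same small, unknown offset."""
    commanded = [0.2] * num_links                       # what the controller believes
    actual = [a + joint_offset_rad for a in commanded]  # what the joints really do
    cx, cy = forward_kinematics(commanded)
    ax, ay = forward_kinematics(actual)
    return math.hypot(ax - cx, ay - cy)

# Compare arms with 1, 3 and 6 links: the tip error grows with the chain length.
errors = [open_loop_tip_error(n) for n in (1, 3, 6)]
for n, e in zip((1, 3, 6), errors):
    print(f"{n} link(s): tip error = {e * 1000:.1f} mm")
```

An open-loop controller never observes this tip error, so it has no way to correct it; it can only try to make the offsets small through calibration.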

As opposed to the open-loop method, visual servoing uses vision information to “close” the loop. This means vision is used not only to select the goal position but also to detect the current position of the robot. Using these two pieces of information from vision, an “error term” is computed:

error = Desired position – Current position

This very simple computation does something very important: calibration errors that appear in both the desired position and the current position cancel out. Hence, if the controller acts on the error rather than directly on the positions obtained from vision, it becomes robust to calibration errors. Calibration still improves the trajectory (path) the robot takes, but the final precision with visual servoing is generally not affected by calibration errors.
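The cancellation can be illustrated with a small one-dimensional sketch. Everything in it is an assumption made for the example: the camera model (a scale and a bias error applied to every position it reports), the proportional gain of 0.5, and the 0.40 m goal. It is not the controller used on a real robot, only the idea reduced to a few lines.

```python
def camera_measure(true_pos, scale=1.1, bias=0.05):
    """Hypothetical miscalibrated camera: distorts every position it reports."""
    return scale * true_pos + bias

def open_loop_move(goal_true):
    """Look at the goal once, then move blindly to the reported position."""
    return camera_measure(goal_true)  # the hand ends up where the camera said the goal was

def visual_servo(goal_true, hand_true=0.0, gain=0.5, steps=50):
    """Repeatedly move the hand by a fraction of the visually perceived error."""
    for _ in range(steps):
        # Both measurements pass through the same camera, so the bias cancels here.
        error = camera_measure(goal_true) - camera_measure(hand_true)
        hand_true += gain * error
    return hand_true

goal = 0.40  # true goal position in metres (assumed)
open_loop_error = abs(goal - open_loop_move(goal))
closed_loop_error = abs(goal - visual_servo(goal))
print(f"open-loop final error:   {open_loop_error:.4f} m")
print(f"closed-loop final error: {closed_loop_error:.2e} m")
```

In this sketch the bias drops out of the error entirely, while the scale error only changes how quickly the hand converges. That matches the remark above that calibration affects the path taken more than the final precision.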

This simple concept of controlling the robot based on the perceived error between the desired and current positions is really the main idea behind visual servoing.

Over the years, a lot of research has gone into visual servoing, and it remains an active area with many open problems. Its main application is the precise positioning of a robotic arm at a desired location. This simple task is crucial to several higher-level tasks, such as grasping an object. In an industrial setting, where the environment can be made favorable to the robot, grasping an object can become quite trivial: the robot can be fixed in place with good precision, and the object to be grasped can be precisely located as well. In that case, vision isn’t even needed for the robot to move to a predefined location and grasp the object. This approach has worked very well in industry.

However, consider a humanoid robot used outside a controlled environment. Suppose it has to move to the kitchen, then find and grab a box of cookies somewhere on the table. This scenario introduces many possible sources of error that are hard for an open-loop controller to cope with.

Object grasping is also the main application targeted by my master’s thesis work. A rough outline of a possible grasping workflow, and where visual servoing fits in, is listed below:

- Detect the object to be grasped

- Move the whole robot to a location close enough to grasp the object

- Move the hand and head such that both the object and hand are in view

- Visually servo the hand to a pre-grasp location

- Grasp the object

This workflow makes full use of the main advantage of visual servoing: being accurate even in the presence of uncertainty and errors.

If you are interested in more technical information, navigate to my other pages:

- Background Theory – provides a collection and brief description of the essential theories in computer vision and robotics from which visual servoing is derived.

- Visual Servoing Methods – provides a concise description of the different methods available in visual servoing and how they can be implemented.

- Results – shows some of the results I have from simulation and real experiments with PAL Robotics’ REEM.